Video taken for the 2018 Telecom Infra Project summit in London.

Tuesday, December 4, 2018

Wednesday, November 7, 2018

The edge computing and access virtualization opportunity

Have you ever tried to edit a presentation online, without downloading it? Did you try to change a diagram or the design of a slide and find it maddening? It is slow to respond, the formatting and alignment are wrong… and you end up downloading it to edit it locally.

Have you ever had to upload a very important and large file? I am talking about tens of gigabytes: the video of your wedding, or the response to a commercial tender that took hundreds of hours of work. Did you then watch that progress bar slowly creep up, or the frustrating hourglass spin for minutes on end?

Have you ever bought the newest, coolest console game only to wait for the game to update, download and install for 10, 20, 30 minutes?

These are a few examples of occurrences so banal that they have become part of our everyday experience. We live through them and accept the inherent frustration because these services are still an improvement over the past.

True, the cloud has brought us a new range of experiences, new services and a great increase in productivity. With its ubiquity, economy of scale and seemingly infinite capacity, the cloud offers an inexpensive, practical and scalable way to offer global services.

So why are we still spending so much money on phones, computers and game consoles if most of the intelligence can be in the cloud and just displayed on our screens?

The answer is complex. We also value immediacy, control and personalization, attributes that cloud services struggle to provide all at once. Immediacy is simple: we do not like to wait. That is why, even though it might be more practical or economical to store all content and services in mega data centers on the other side of the planet, we are not willing to wait for our video to start, for our search results to display, for our multiplayer game to react…

Control is more delicate. Privacy, security and regulatory mandates are difficult to achieve in a hyper-distributed, decentralized internet. That is why, even though we trust our online storage account, we still store files on our computer’s hard drive, pictures on our phone and game saves on our console.

Personalization is even more elusive. Cloud services do a great job of understanding our purchase history, viewing habits, likes, etc., but there still seems to be a missing link between these services and your true context: for example, when you are teleworking at home and want to make sure your video conference runs smoothly while your children play video games on the console and live stream a 4K video.

As we can see, there are still services and experiences that are not completely satisfied by the cloud. For these we keep relying on expensive devices at home or at work and accept the limitations of today’s technologies.

Edge computing and service personalization is a Telefonica Networks Innovation project that promises to solve these issues, bringing the best of the cloud and on-premises to your services.

The idea is to distribute the cloud further, into Telefonica’s data centers, and to deploy these closer to the users. Based on the Unica concepts of network virtualization, applied to our access networks (mobile, residential fiber and enterprise), edge computing makes it possible to deploy services, content and intelligence a few milliseconds away from your computer, your phone or your console.

How does it work? It is simple. A data center based on open architecture and interfaces is deployed in our central office, which allows us to deploy our traditional TV, fixed and mobile telephony, and residential and corporate internet services. Then, since the infrastructure is virtualized and open, it allows third-party services to be deployed rapidly, from your favorite game provider to your trusted enterprise office applications or your mobile apps. Additionally, the project has disaggregated and virtualized part of our access networks (OLT for fiber, baseband unit for mobile, WAN for enterprise) and radically simplified them.

The result is what is probably the world’s first multi-access edge computing platform spanning residential, enterprise and mobile access that is completely programmable. It allows us, for the first time, to provide a single transport and a single range of services to all our customers, differentiating only the access.

What does it change? Pretty much everything. All of a sudden, you can upload a large 1 GB file to your personal storage in 6 seconds instead of the 5 minutes it took on the cloud. You can play your favorite multiplayer game online without a console. You can edit this graphic file online without having to download it. And these are just existing services that are getting better. We are also looking at new experiences that will surprise you. Stay tuned!
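For readers who want to see what the upload figures above imply, here is a quick back-of-the-envelope calculation. It is only a sketch: it assumes decimal units (1 GB = 8,000 Mbit) and a constant transfer rate, and the function name is purely illustrative.

```python
def implied_throughput_mbps(size_gb, seconds):
    """Average throughput in Mbit/s, assuming decimal units (1 GB = 8,000 Mbit)."""
    return size_gb * 8000 / seconds


# Figures quoted in the post: 1 GB in 6 seconds at the edge vs 5 minutes via the cloud.
edge = implied_throughput_mbps(1, 6)
cloud = implied_throughput_mbps(1, 5 * 60)

print(f"edge: {edge:.0f} Mbit/s, cloud: {cloud:.0f} Mbit/s, ratio: {edge / cloud:.0f}x")
```

Under these assumptions, the edge upload corresponds to roughly 1.3 Gbit/s sustained, about fifty times the rate implied by the five-minute cloud upload.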

Thursday, October 4, 2018

Telefonica and the edge computing opportunity

Real-world use cases of edge computing with Intel, Affirmed Networks and Telefonica

Thursday, July 19, 2018

How Telefonica uses AI / ML to connect the unconnected

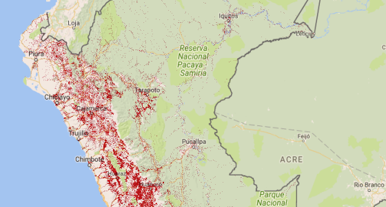

This presentation details how Telefonica has been using data science to systematically identify and locate the unconnected and to evolve its networks and operations to sustainably bring connectivity to most parts of Latin America.

Internet para Todos is Telefonica's flagship program to connect the unconnected in LatAm. Today, more than 100 million people in the Telefonica footprint live without reliable internet connectivity. The reasons are multiple, ranging from geography and population density to socioeconomic conditions.

Fixed and mobile networks have historically been designed for maximum efficiency in dense, urban environments. Deploying these technologies in remote, low density, rural areas is possible but inefficient, which challenges the financial sustainability of the model.

To deliver internet in these environments in a sustainable manner, it is necessary to increase efficiency through systematic cost reduction, investment optimization and targeted deployments.

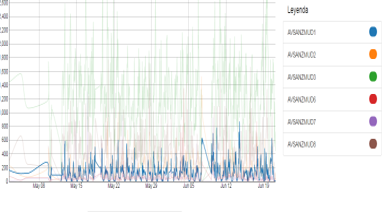

Systematic optimization necessitates continuous measurement of the financial, operational, technological and organizational data sets.

1. Finding the unconnected

2. Optimizing transport

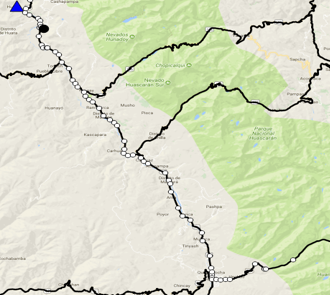

The team started by adding road and infrastructure data from public sources to the model, and used graph generation to cluster population settlements. Graph analysis (shortest path, Steiner tree) then yielded transport routes optimized for population density.
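To give a feel for the kind of graph analysis mentioned here, the following is a minimal sketch, not the project's actual method. It connects a set of settlements over a road graph by building a minimum spanning tree on shortest-path distances, a simplified form of a classic Steiner tree heuristic (the final pruning step is omitted). The road graph, node names and weights are hypothetical, and the graph is assumed to be connected and undirected.

```python
import heapq


def shortest_paths(graph, source):
    """Dijkstra: return (distance, predecessor) maps from source."""
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, weight in graph[node].items():
            nd = d + weight
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                prev[neighbor] = node
                heapq.heappush(heap, (nd, neighbor))
    return dist, prev


def path_edges(prev, source, target):
    """Walk predecessors back from target to source, collecting undirected edges."""
    edges = []
    node = target
    while node != source:
        edges.append(frozenset((prev[node], node)))
        node = prev[node]
    return edges


def connect_settlements(graph, settlements):
    """Prim-style tree over shortest-path distances between settlements."""
    paths = {s: shortest_paths(graph, s) for s in settlements}
    connected = {settlements[0]}
    route = set()
    while len(connected) < len(settlements):
        # Pick the cheapest shortest path from the connected set to a new settlement.
        _, a, b = min(
            (paths[a][0][b], a, b)
            for a in connected
            for b in settlements
            if b not in connected
        )
        route.update(path_edges(paths[a][1], a, b))
        connected.add(b)
    cost = sum(graph[u][v] for u, v in (tuple(edge) for edge in route))
    return route, cost


# Hypothetical road graph: A, B, C are settlements, J is a road junction.
roads = {
    "A": {"J": 4, "B": 10},
    "B": {"J": 3, "A": 10, "C": 9},
    "C": {"J": 5, "B": 9},
    "J": {"A": 4, "B": 3, "C": 5},
}

route, cost = connect_settlements(roads, ["A", "B", "C"])
print(sorted(sorted(edge) for edge in route), cost)
```

In this toy example the route passes through the junction J, which is not a settlement itself, for a total cost of 12, whereas linking the settlements only by their direct roads would cost 19. That is the essence of why Steiner-style analysis can find cheaper transport routes than connecting sites pairwise.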

3. AI to optimize network operations

I think that the type of data-driven approach to complex problem solving demonstrated in this project is key to network operators' sustainability in the future.

It is not only a rural problem; increasing efficiency and optimizing deployment and operations is necessary to keep decreasing costs.

Friday, June 1, 2018

Friday, March 9, 2018
