As is now customary, the "big 5" European operators behind Open RAN have released their updated requirements to the market, indicating to vendors where they should direct their roadmaps to have the best chance of being selected in these networks.

As with previous iterations, I find it useful to compare and contrast the unanimous and highest priority requirements as indications of market maturity and direction. Here is my read on this year's release:

Scenarios:

As with last year, the big 5 unanimously require support for O-RU and vDU/CU with an open fronthaul interface on site for macro deployments. This indicates that although the desire is to move to a disaggregated implementation, with vDU/CU potentially moving to the edge or the cloud, the operators are not yet fully ready for these scenarios and prioritize first a like-for-like replacement of a traditional gNodeB with a disaggregated, virtualized version, all at the cell site.

Moving to the high priority scenarios requested by a majority of operators, vDU/vCU in a remote site with O-RU on site makes its appearance, together with RAN sharing. Both MORAN and MOCN scenarios are desirable, the former with shared O-RU and dedicated vDU/vCU and the latter with shared O-RU, vDU and optionally vCU. In all cases, a RAN sharing management interface is to be implemented to allow host and guest operators to manage their RAN resources independently.

Additional high priority requirements are the support for indoor and outdoor small cells. Indoor deployments share O-RU and vDU/vCU in multi-operator environments; outdoor deployments are single-operator, with O-RU and vDU either co-located on site or fully integrated with Higher Layer Split. The last high priority requirement is for 2G/3G support, without indication of architecture.

Security:

The security requirements are essentially the same as last year, freely adopting 3GPP requirements for Open RAN. The controversy around Open RAN's level of security compared to other cloud virtualized applications or traditional RAN architecture has been put to bed. Most realize that open interfaces inherently open more attack surfaces, but this is not specific to Open RAN: every cloud-based architecture has the same drawback. Security by design goes a long way towards alleviating these concerns, and a proper zero trust architecture can in many cases provide a higher security posture than legacy implementations. In this case, extensive use of IPsec, TLS 1.3 and certificates at the interface and port levels for open fronthaul and management plane provides the necessary level of security, together with the mTLS interface between the RICs. The O-Cloud layer must support Linux security features, secure storage, and encrypted secrets with an external storage and management system.
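To give a feel for what "TLS 1.3 plus mTLS" means in practice, here is a minimal sketch using Python's standard `ssl` module. It is only an illustration of the general pattern, not something taken from the MoU document: the function name and the optional certificate paths are hypothetical, and a real RIC interface would sit behind whatever stack the vendor uses.

```python
import ssl

def make_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that refuses anything below TLS 1.3
    and demands a client certificate (mutual TLS).

    The file arguments are hypothetical placeholders; they are only loaded
    when provided, so the sketch runs without any certificates on disk.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3  # reject TLS 1.2 and older
    ctx.verify_mode = ssl.CERT_REQUIRED           # the peer must present a certificate
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA used to validate client certs
    return ctx

ctx = make_mtls_server_context()
print(ctx.minimum_version, ctx.verify_mode)
```

The point is simply that both sides authenticate with certificates and that older protocol versions are refused outright, which is the posture the requirements describe.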

CaaS:

As with last year, the cloud native infrastructure requirements have been refined, including Hardware Accelerator (GPU, eASIC) Kubernetes support, Block and Object Storage for dedicated and hyper-converged deployments, etc. Kubernetes infrastructure discovery, deployment, lifecycle management and cluster configuration have been further detailed. Power saving specific requirements have been added at the fan and CPU level, with SMO-driven policy and configuration and idle mode power down capabilities.

CU / DU:

CU/DU interface requirements remain the same, basically the support for all Open RAN interfaces (F1, HLS, X2, Xn, E1, E2, O1...). Support for both look-aside and inline accelerator architectures is also of the highest priority, indicating that operators haven't really reached a conclusion on a preferred architecture and are mandating both for flexibility's sake (in other words, inline acceleration hasn't convinced them that it can efficiently, in cost and power, replace look-aside). Fronthaul ports must support up to 200 Gb through combinations of 12 x 10/25 Gb, and midhaul up to 2 x 100 Gb. Energy efficiency and consumption are to be reported for all hardware (servers, CPUs, fans, NICs...). Power consumption targets for D-RAN of 400 W at 100% load for 4T4R and 500 W for 64T64R are indicated. These targets seem optimistic and not representative of current vendor capabilities in that space.
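The fronthaul port figure is easier to picture with a little arithmetic. The sketch below assumes one reading of the requirement, which is my interpretation rather than the MoU wording: up to 12 ports, each running at either 10 or 25 Gb/s, with the aggregate capped at 200 Gb/s. The function name and parameters are hypothetical.

```python
def port_mixes(max_ports=12, cap_gbps=200, speeds=(10, 25)):
    """Enumerate (n_slow, n_fast, total_gbps) port mixes that fit within
    the port count and the aggregate capacity cap.

    Assumption: every port runs at one of the two speeds and the cap
    applies to the sum of all port speeds.
    """
    slow, fast = speeds
    mixes = []
    for n_slow in range(max_ports + 1):
        for n_fast in range(max_ports + 1 - n_slow):
            total = n_slow * slow + n_fast * fast
            if 0 < total <= cap_gbps:
                mixes.append((n_slow, n_fast, total))
    return mixes

mixes = port_mixes()
# Mixes that land exactly on the 200 Gb/s ceiling.
at_cap = [(s, f) for s, f, total in mixes if total == 200]
print(at_cap)  # [(0, 8), (5, 6)]
```

Under that reading, only two mixes reach the full 200 Gb/s: eight 25 Gb ports, or five 10 Gb ports plus six 25 Gb ports. Twelve 25 Gb ports would add up to 300 Gb/s, so the 200 Gb figure acts as an aggregate ceiling rather than the sum of all ports at full rate.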

O-RU:

The radio situation is still messy and my statements from last year still mostly stand: "While all operators claim highest urgent priority for a variety of Radio Units with different form factors (2T2R, 2T4R, 4T4R, 8T8R, 32T32R, 64T64R) in a variety of bands (B1, B3, B7, B8, B20, B28B, B32B/B75B, B40, B78...) and with multi band requirements (B28B+B20+B8, B3+B1, B3+B1+B7), there is no unanimity on ANY of these. This leads vendors trying to find which configurations could satisfy enough volume to make the investments profitable in a quandary. There are hidden dependencies that are not spelled out in the requirements and this is where we see the limits of the TIP exercise. Operators cannot really at this stage select 2 or 3 new RU vendors for an open RAN deployment, which means that, in principle, they need vendors to support most, if not all of the bands and configurations they need to deploy in their respective network. Since each network is different, it is extremely difficult for a vendor to define the minimum product line up that is necessary to satisfy most of the demand. As a result, the projections for volume are low, which makes the vendors only focus on the most popular configurations. While everyone needs 4T4R or 32T32R in n78 band, having 5 vendors providing options for these configurations, with none delivering B40 or B32/B75 makes it impossible for operators to select a single vendor and for vendors to aggregate sufficient volume to create a profitable business case for open RAN." This year, there is one high priority configuration that has unanimous support: 4T4R B3+B1. The other highest priority configurations requested by a majority of operators are 2T4R B28B+B20+B8, 4T4R B7, B3+B1, B32B+B75B, and 32T32R B78 with various power targets from 200 to 240 W.

Open Fronthaul:

The fronthaul interface requirements only acknowledge the introduction of uplink enhancements for massive MIMO scenarios, as they will be introduced in the 7.2.x specification, with a lower priority. This indicates that while Ericsson's proposed interface and architecture impact is being vetted, it is likely to become an optional implementation, left to the vendor's choice until / unless credible cost / performance gains can be demonstrated.

Transport:

Optical budgets and scenarios are now introduced.

RAN features:

Final MoU positions are now proposed. Unanimous items introduced in this version revolve mostly around power consumption and efficiency counters, KPIs and mechanisms. Other new requirements follow 3GPP Release 16 and 17 on carrier aggregation, slicing and MIMO enhancements.

Hardware acceleration:

This is a new section, introduced to clarify the requirements associated with L1 and L2 use of look-aside and inline acceleration. The most salient requirement is for simultaneous multi-RAT 4G/5G support.

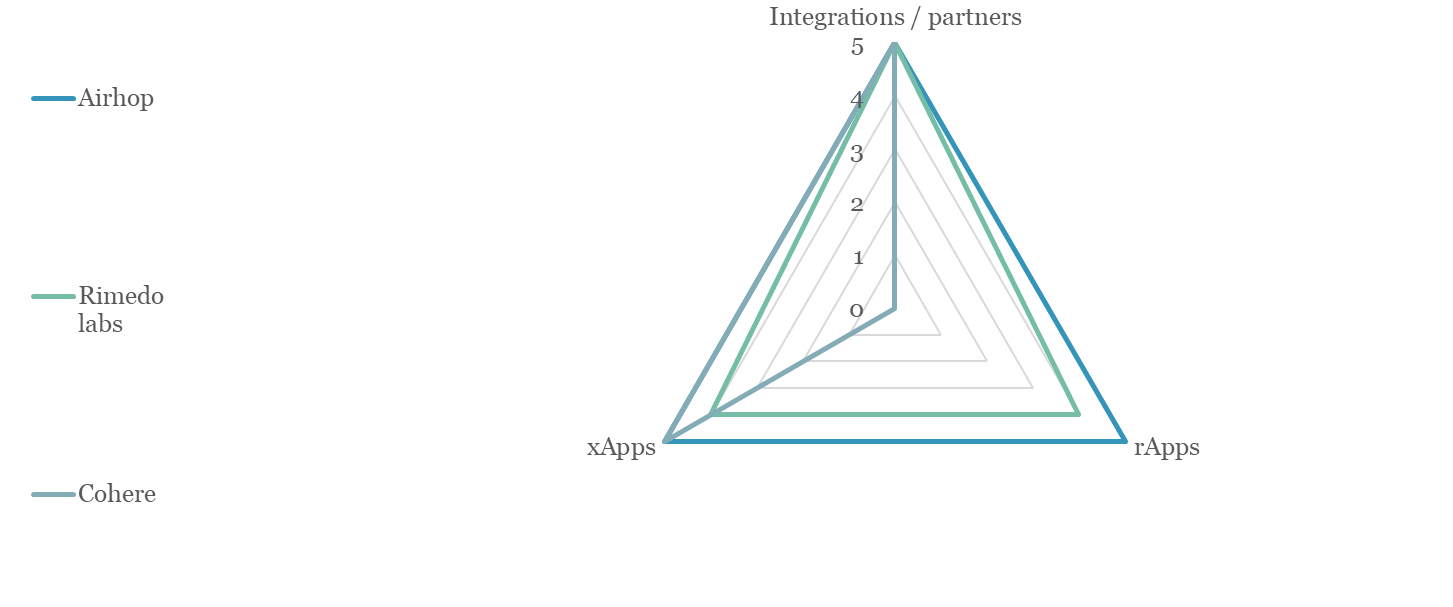

Near RT RIC:

The Near Real Time RIC requirements continue to evolve and be refined. My perspective on the topic hasn't changed, and a detailed analysis can be found here. In short, letting third parties prescribe policies that will manipulate the DU's scheduler is anathema for most vendors in the space and, beyond the technical difficulties, would go against their commercial interests. Operators will have to push very hard, with much commercial incentive, to see xApps from third-party vendors being commercially deployed.

E2E use cases:

End-to-end use cases are being introduced to clarify the operators' priorities for deployments. There are many, but together they offer a good understanding of those priorities: traffic steering for dynamic traffic load balancing, QoE and QoS based optimization to allocate resources based on a desired quality outcome, RAN sharing, slice assurance, V2X, UAV, energy efficiency... This section is a laundry list of desiderata, all mostly high priority, suggesting that operators are getting a little unfocused on which real use cases they should concentrate on as an industry. As a result, it is likely that too many priorities result in no priority at all.

SMO:

With over 260 requirements, the SMO and Non-RT RIC section is probably the most mature and shows a true commercial priority for the big 5 operators.

All in all, the document provides a good idea of the level of maturity of Open RAN for the operators that have been supporting it the longest. The type of requirements and their prioritization provide a useful framework for vendors who know how to read them.

More in-depth analysis of Open RAN and the main vendors in this space is available here.